Depository: Trash in Mixed Reality

Collaborator: Stephanie Cedeño

2017 / 4-week project

CATEGORIES: UX, interaction, speculative, design research

KEYWORDS: augmented reality (AR), mixed reality (MR)

Using the Microsoft Hololens and Unity, my collaborator and I proposed a set of new interactions for dealing with (and living with) discarded or inactive digital objects in Mixed Reality. Our prototypes centered on the question:

How do we “take out the trash” in mixed reality?

PROJECT OVERVIEW

Depository: Trash in Mixed Reality focuses on the trash, a seemingly mundane subject that at first glance doesn’t have a place in virtual or augmented reality.

PROJECT BRIEF: Everyday Immersions

Depository concluded a 4-week studio that began with a technical and conceptual deep dive into VR and AR technologies. The project was developed in response to the following prompt:

As VR and AR technologies become increasingly ubiquitous and cultural acceptance grows how might these technologies begin to permeate through our world, making immersive experiences more routine – like putting on a watch or diving in for a 'special' occasion? How will these emerging technologies redefine our everyday experiences?

Phase 01: PRIMARY RESEARCH

We began the project with on-the-ground research, simultaneously:

- Getting insights from user research to see what it is like to deal with trash and clutter in different kinds of households;

- Conducting technical research using Microsoft Hololens as an augmented reality device.

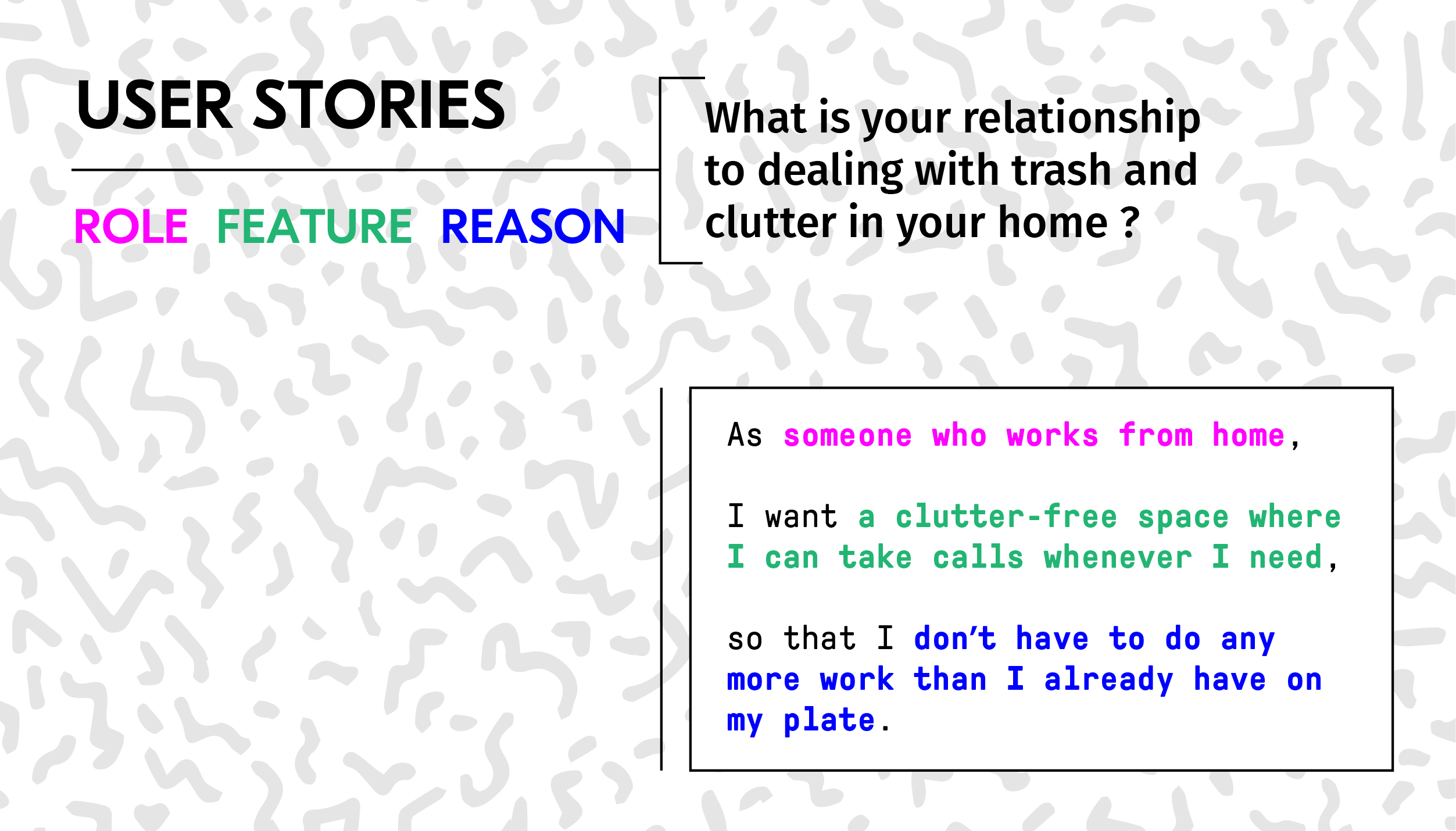

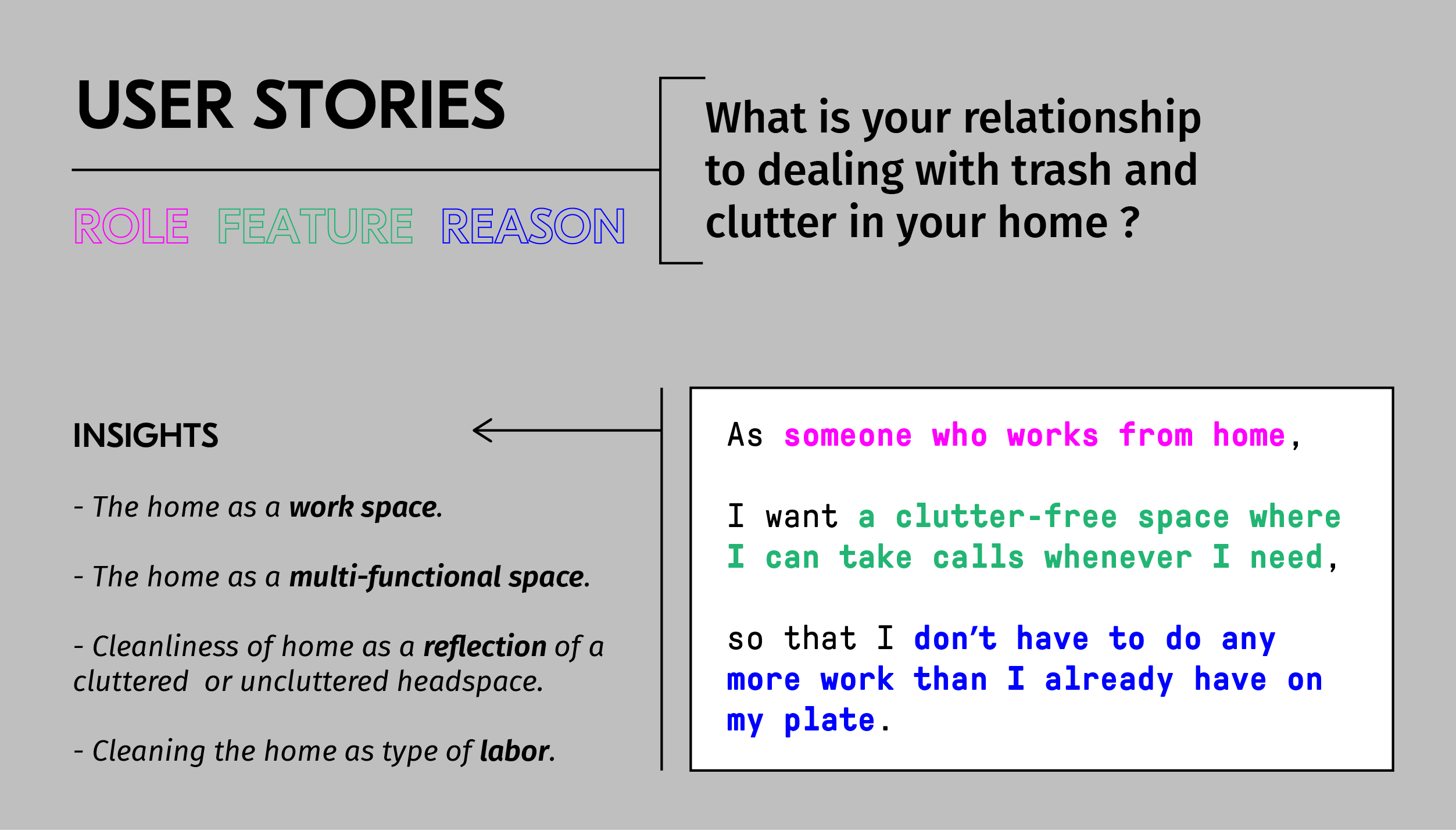

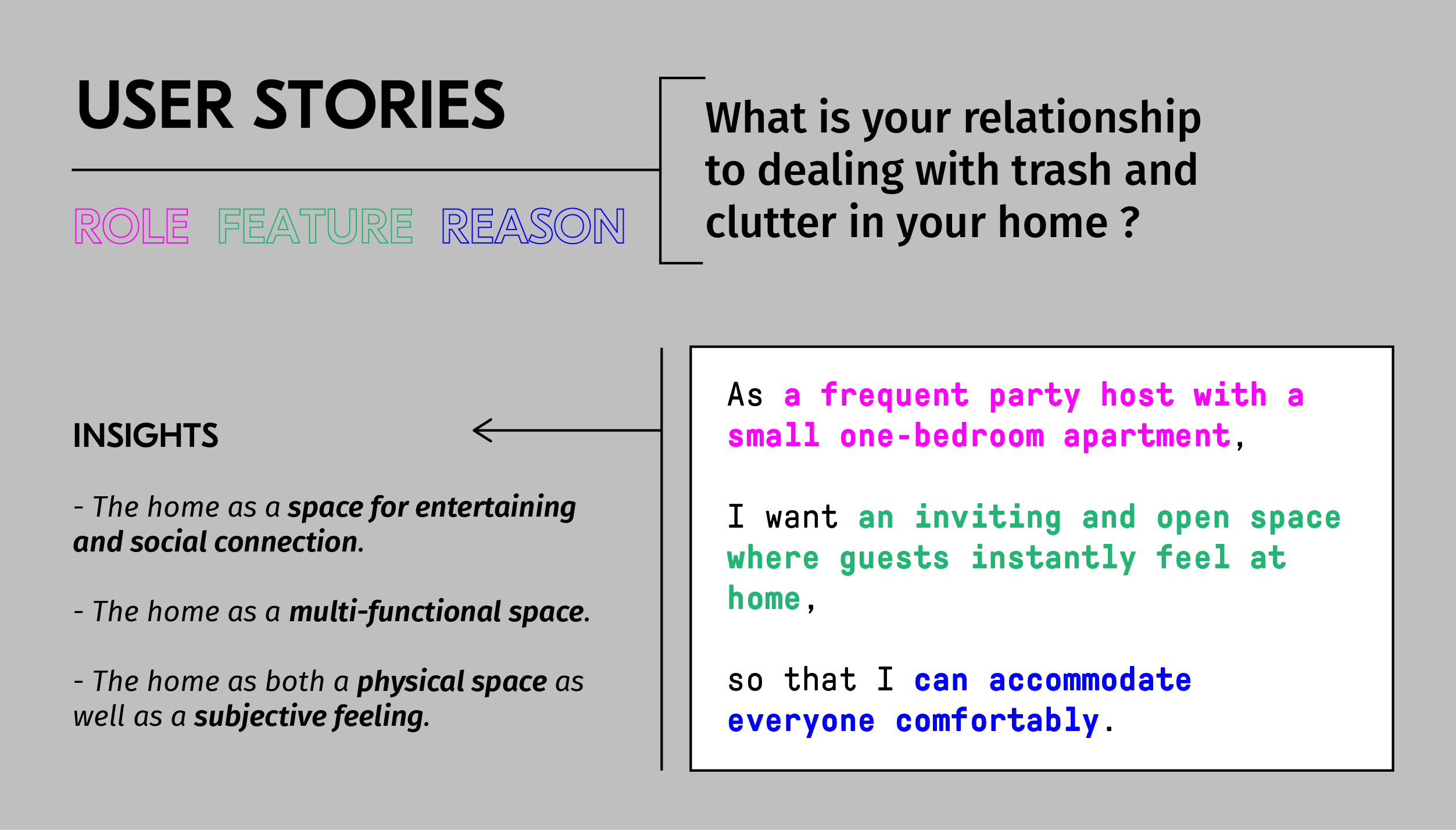

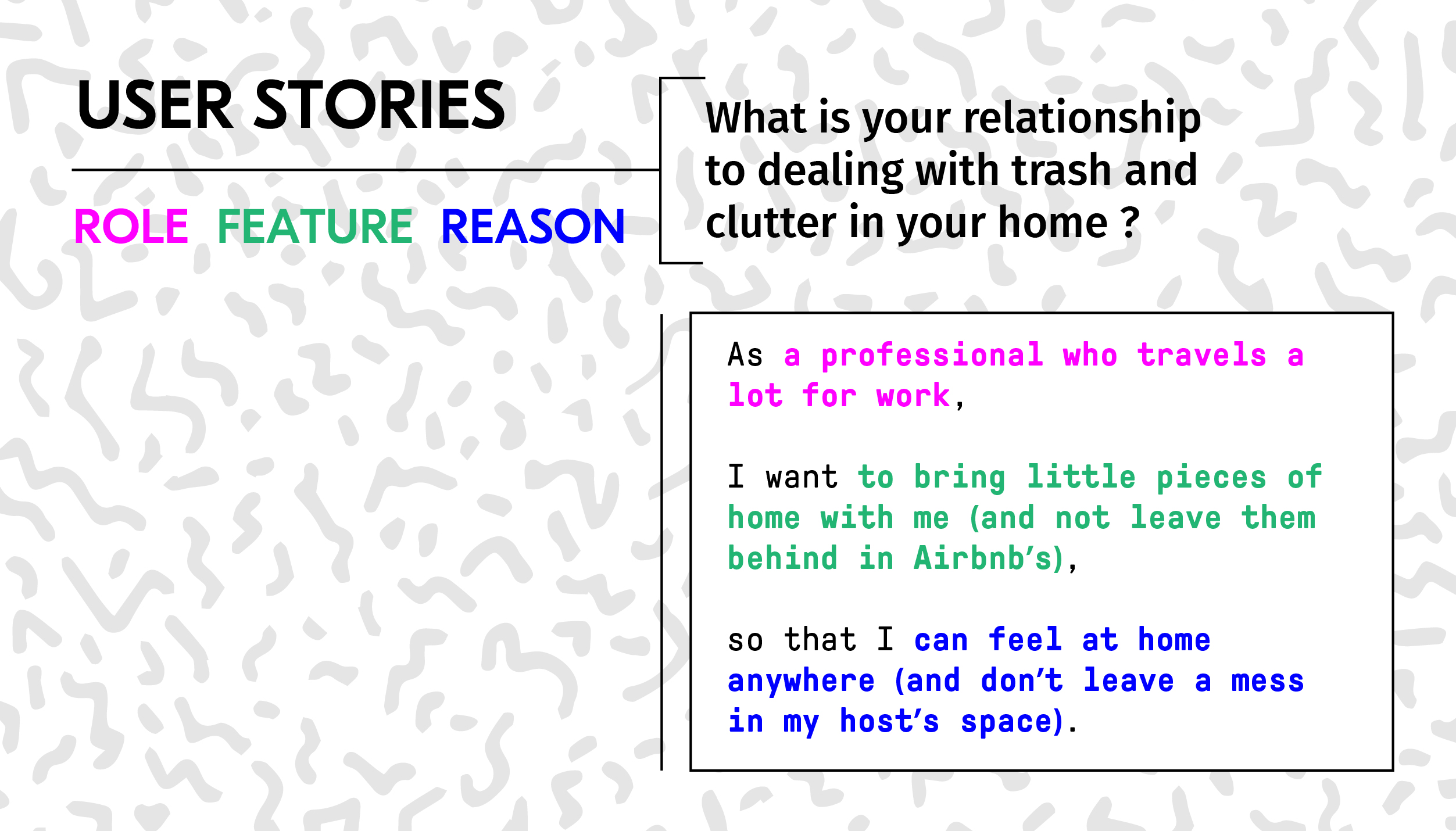

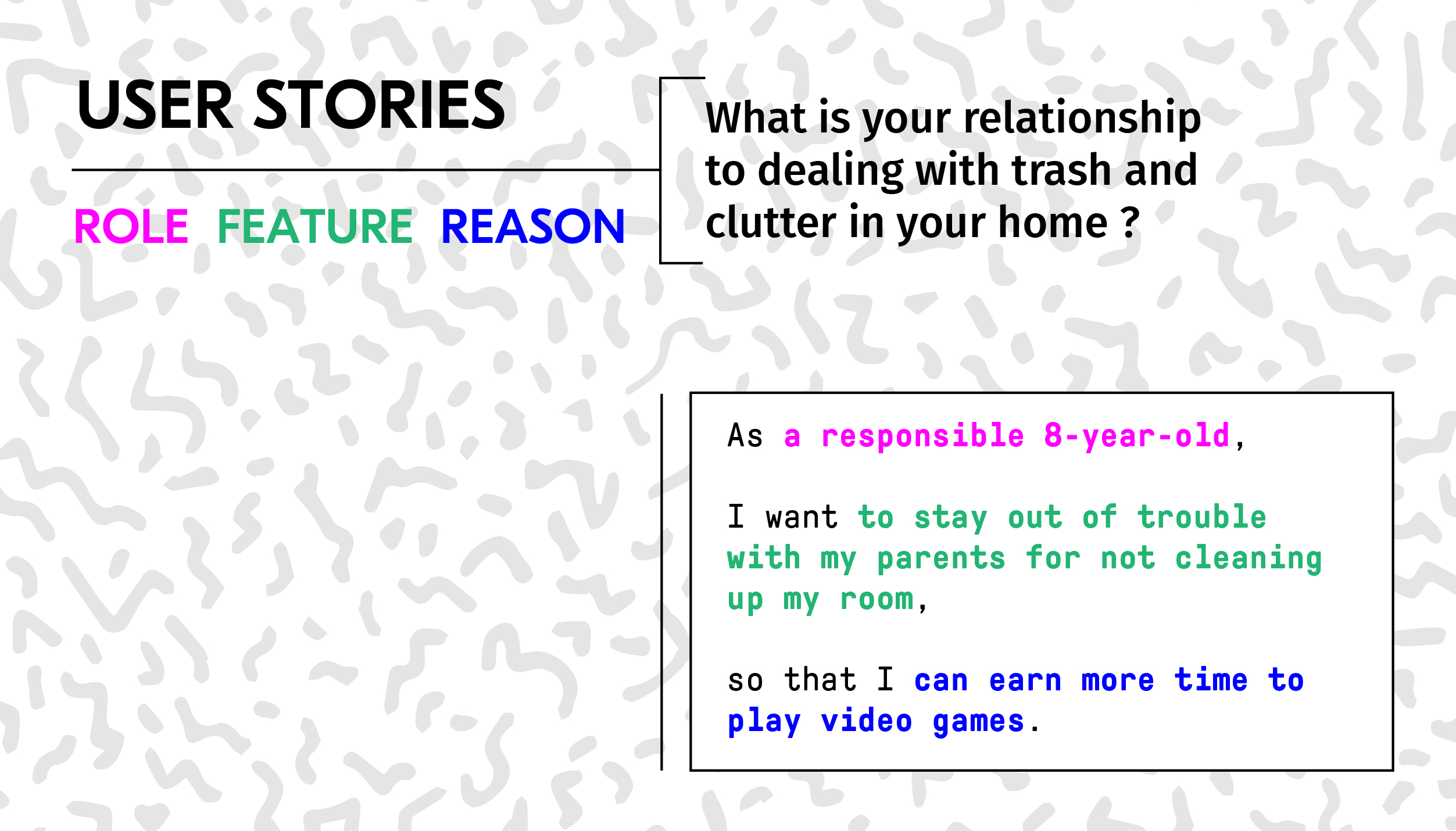

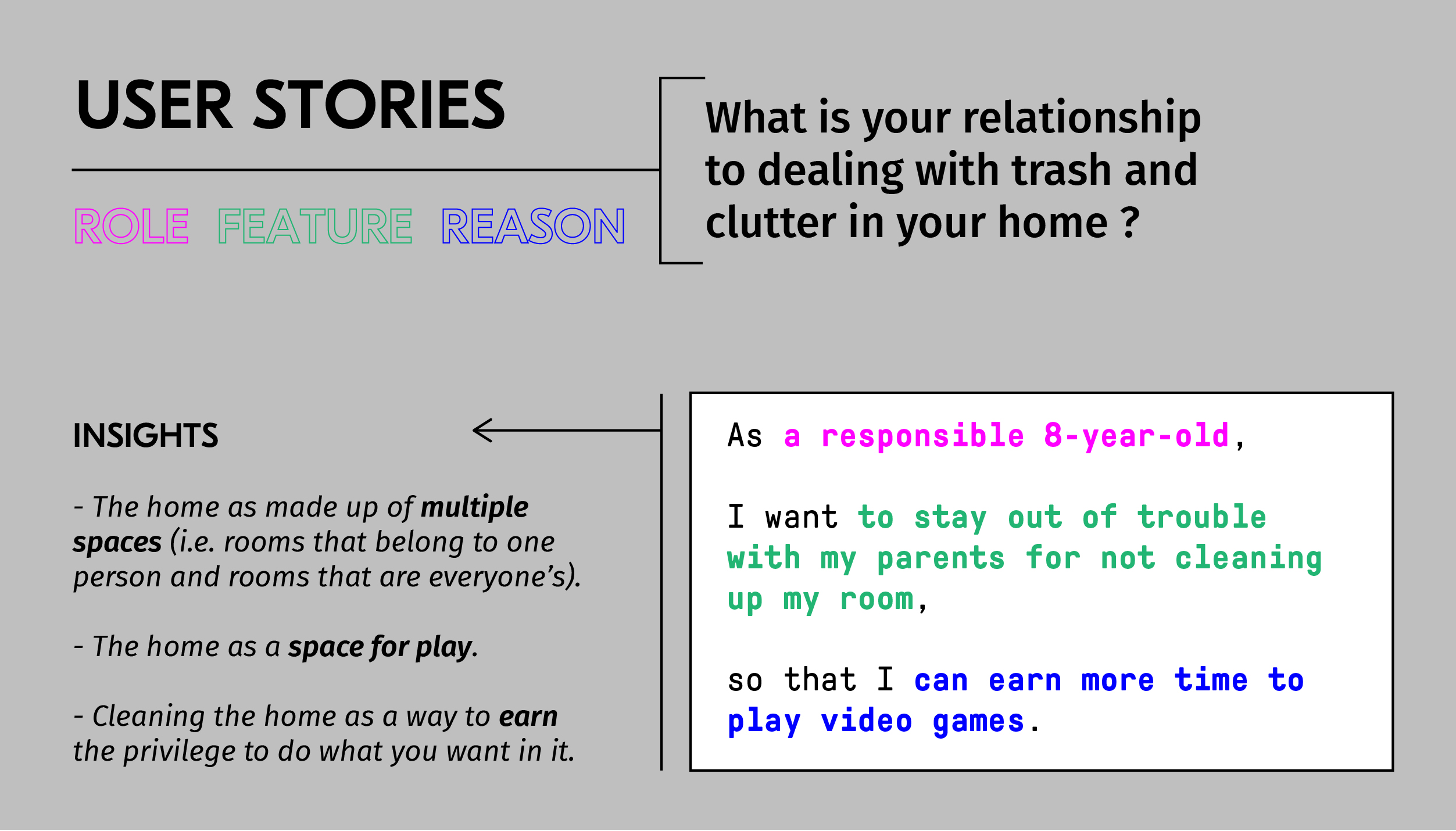

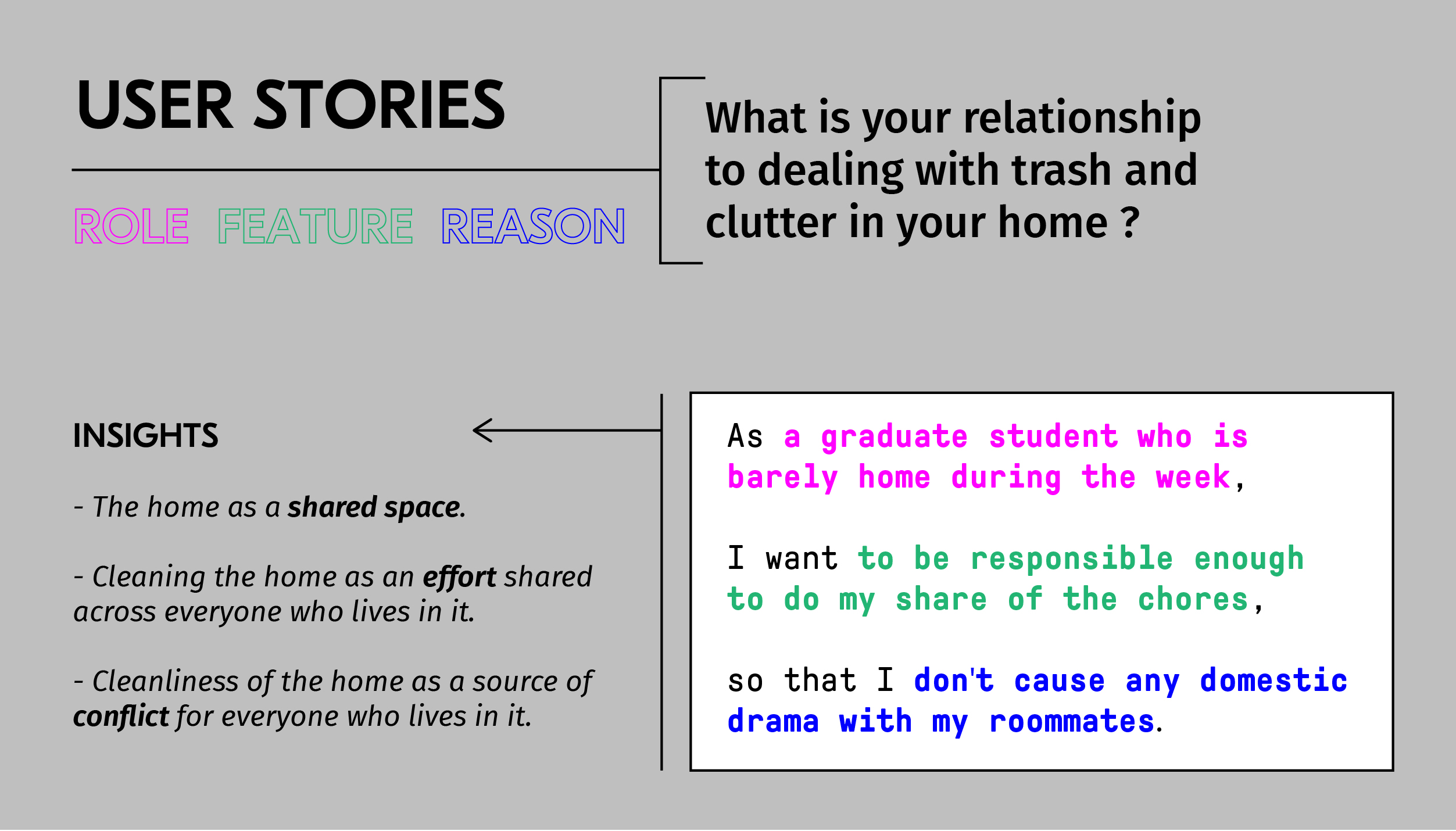

↳ Selected user stories that we synthesized from our interviews.

USER RESEARCH

Although interviewing multiple people who live with a Microsoft Hololens in their home was not exactly an option for us, we knew that we could gather similar insights by interviewing people about their relationship with trash and clutter (both physical and digital) in their domestic spaces.

↳ Initial concept collage for thinking about trash and clutter in Mixed Reality — will it become a new kind of “junkspace”?

TECHNICAL RESEARCH

We started this phase by simply getting used to being in the headset, summoning up holograms and apps. It became difficult to keep track of where we put everything, which led us to question: How easy or difficult is it to “make a mess” using the Microsoft Hololens? You might think that you’ve deleted all of your holograms, but then you put on your headset and see a raptor in the kitchen that you don’t remember putting there…

↳ Investigating the “squishy grid” and spatial memory of the Microsoft Hololens.

In the course of “making a mess” with the Hololens, we gathered two main insights:

1. SPATIAL MAPPING + THE SQUISHY GRID:

Hololens saves spaces. We once put on the headset and noticed that the Hololens was showing the grid of a previously mapped physical space that we were not currently in. We saw a chair mapped in the “squishy grid” but in reality, there was no chair there. This was an unsettling, spatially disorienting experience; however, we latched onto a “site specificity” inherent to AR technologies.

2. PROGRAMMED DESIRES:

Holograms and other AR assets don’t behave in exactly the same way that physical objects in the “real world” do. Whereas physical objects are subject to gravity and usually stay where a person leaves them, digital objects have a programmed desire to settle on architectural edges and walls. Many other 2D and 3D design tools also have this “snap-to-grid” affordance for interfaces, but it is often not something witnessed in the physical world.

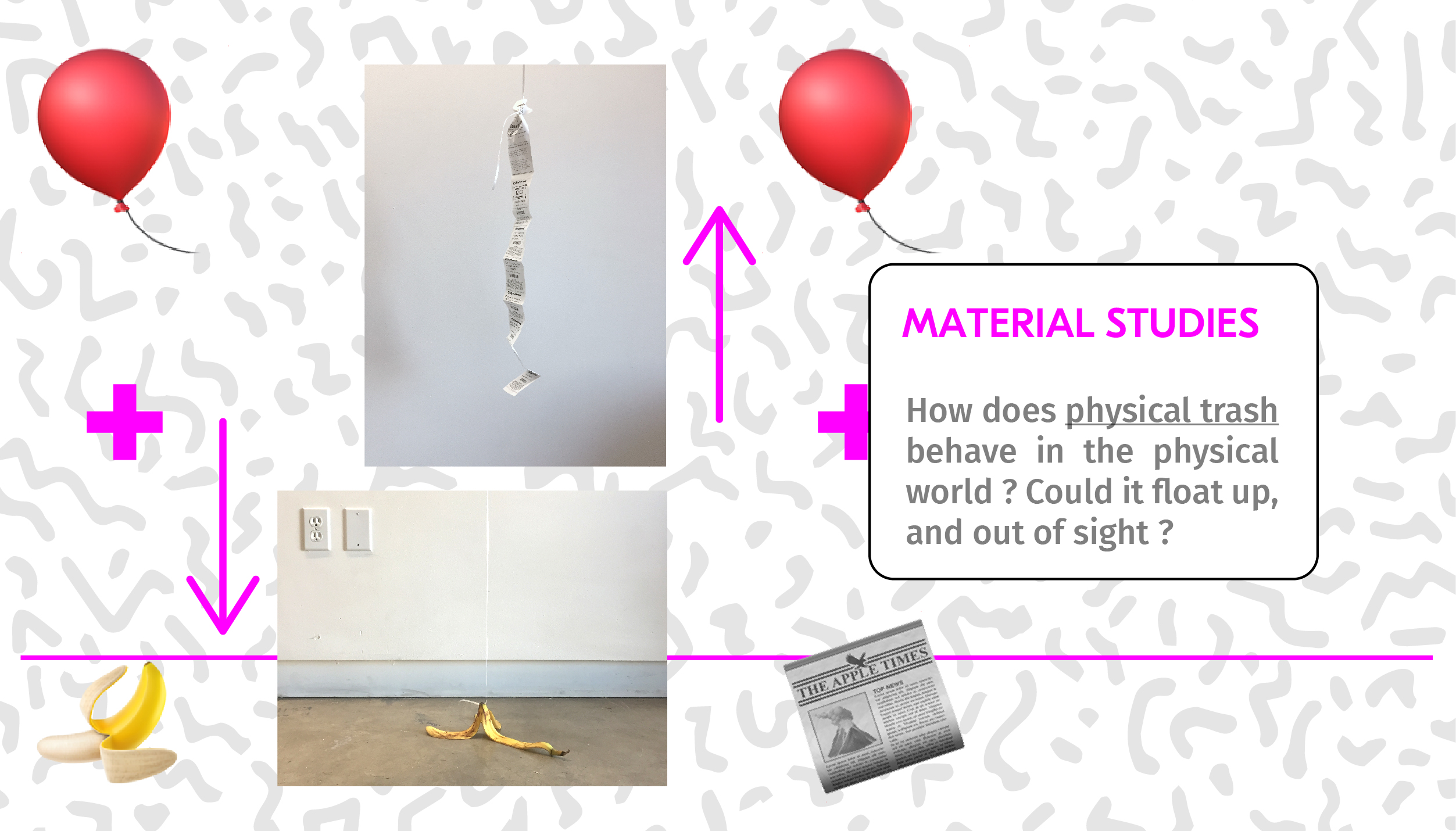

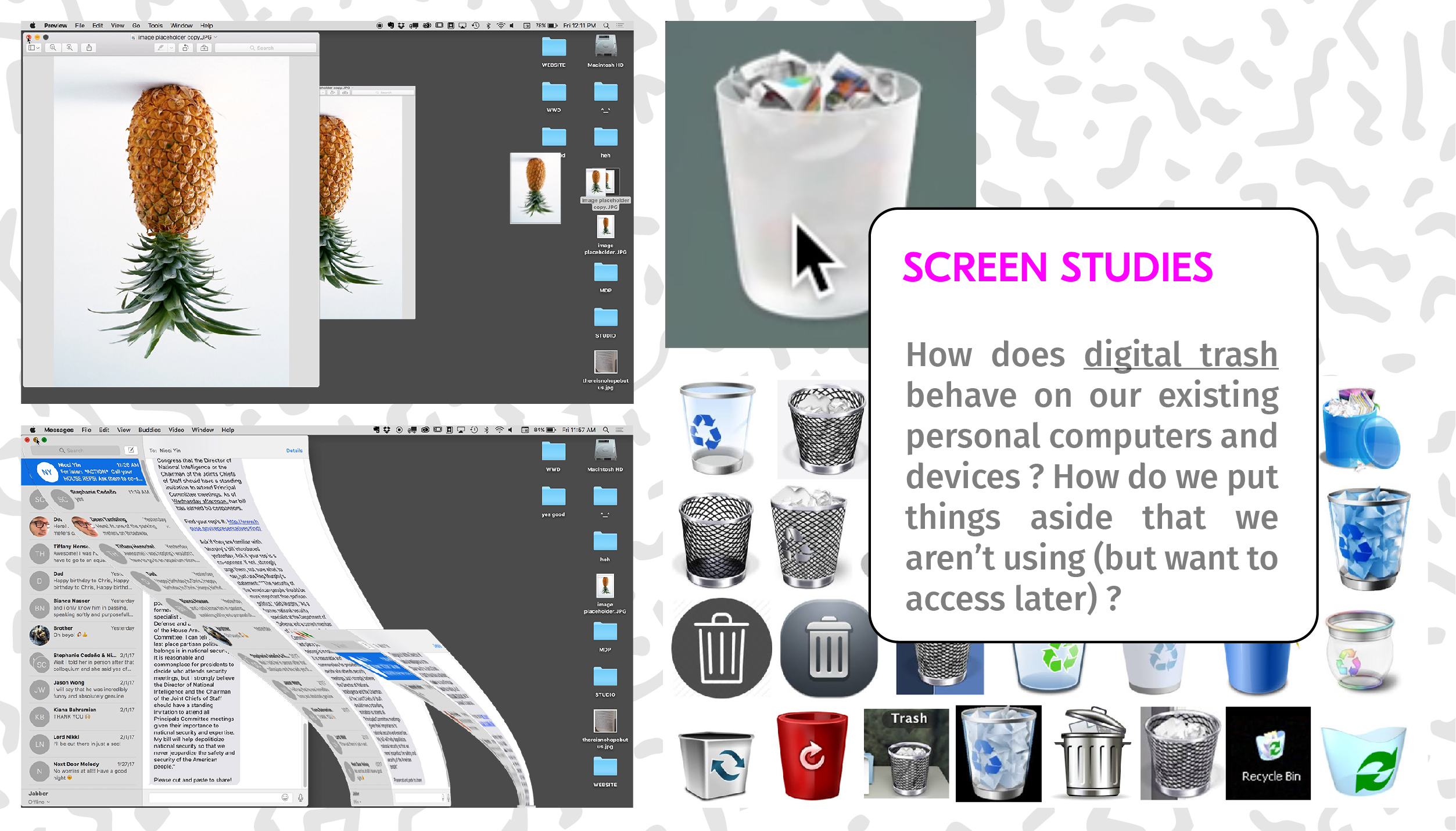

Phase 02: SECONDARY RESEARCH

We used these insights from user research and technical research as a guide to imagine how people might have to renegotiate their home spaces in the context of trash and clutter in Mixed Reality. Our secondary phase of research was very exploratory, allowing us to dig into the primary research and extrapolate from it using different methods and concepts.

Phase 03: SYNTHESIS

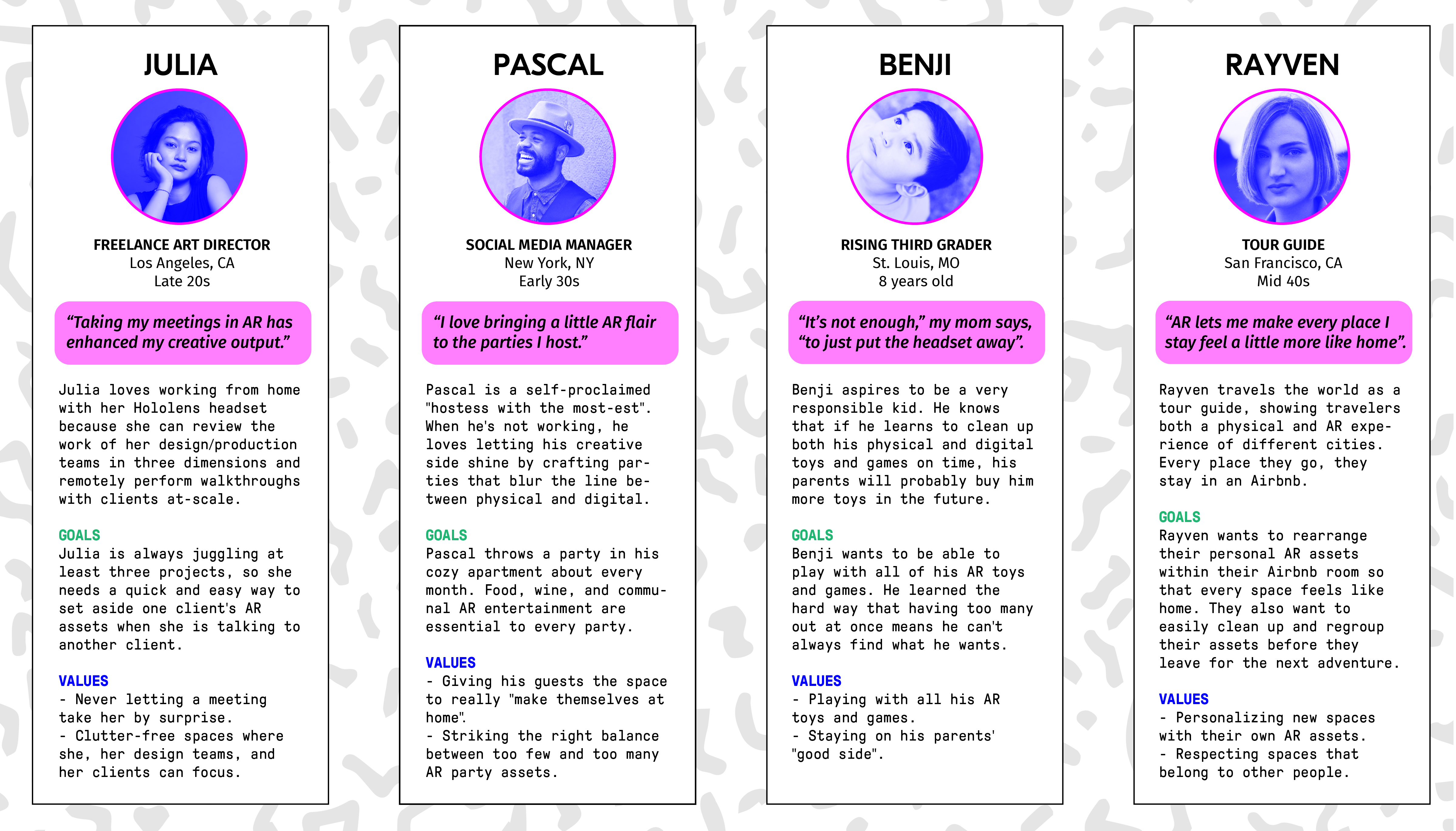

USER PERSONAS

After branching out in our secondary phase of research, we refocused by creating four personas that synthesize the insights from our user research with the insights from our technical and conceptual research, keeping in mind the affordances of the Hololens and how those might integrate with how people normally live their lives at home, outside of Mixed Reality.

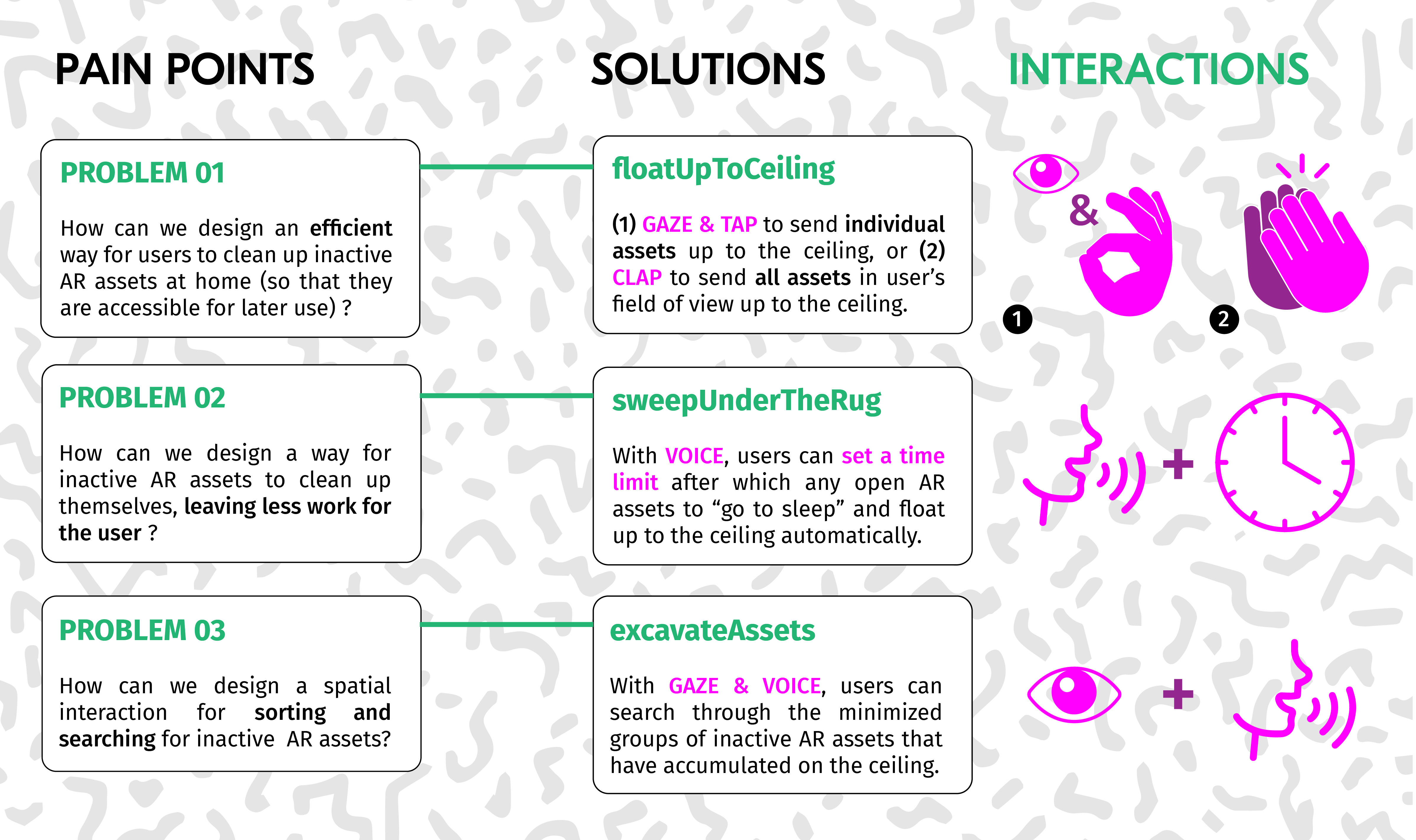

PAIN POINTS + SOLUTIONS

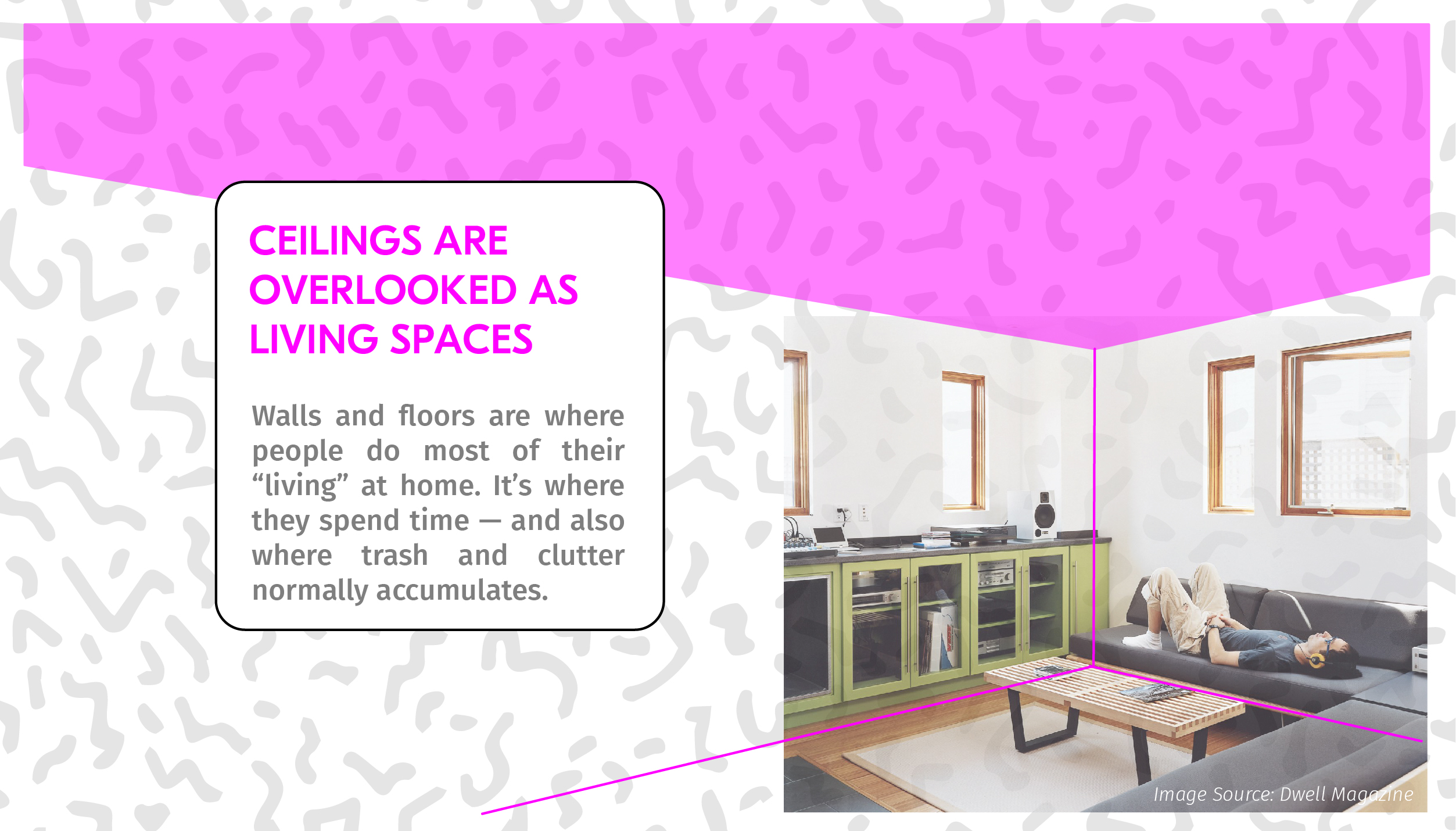

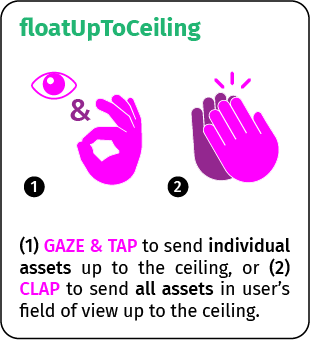

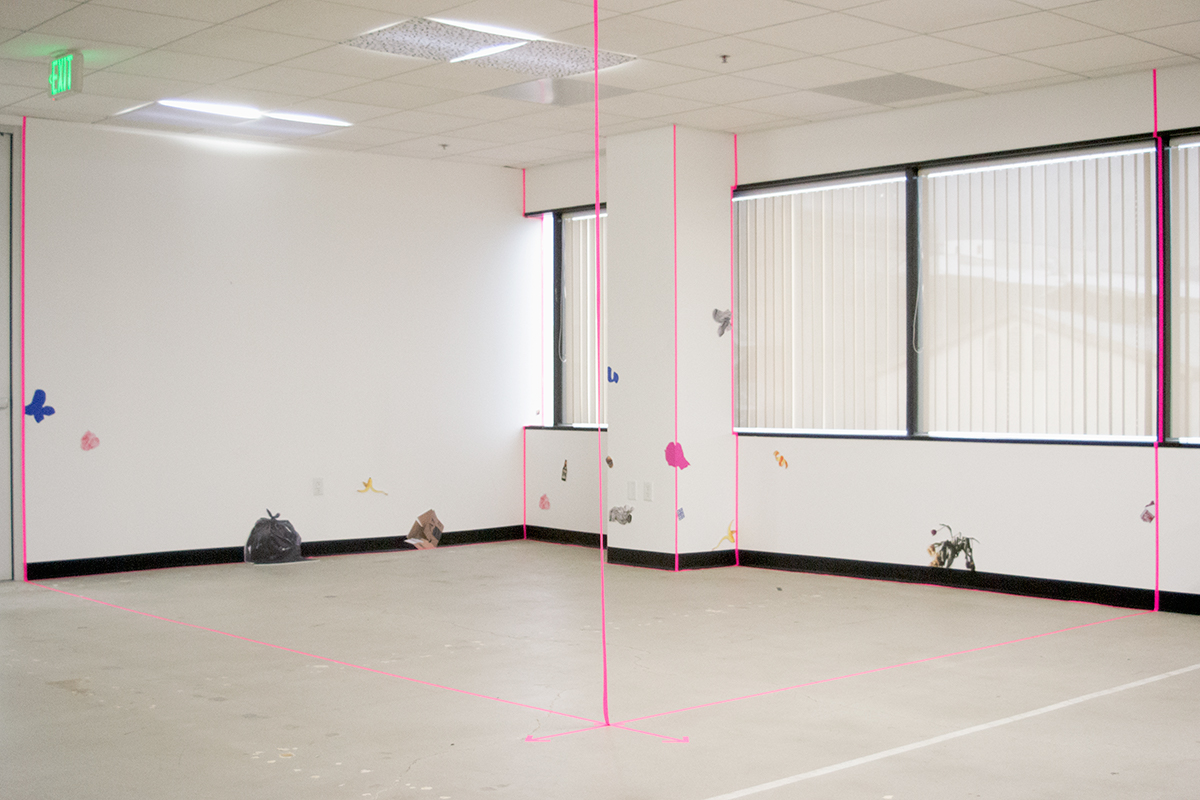

If the ceiling is an inactive space in most homes, we can leverage it as a way to deal with “trash” in Mixed Reality. Inactive AR assets can float up and out of the way to the physically unoccupied and overlooked space of the ceiling. People can use the physical space of the home without the visual clutter of holograms and apps that they aren’t using at the moment. The ceiling also serves as a kind of “storage space”, from which users can access inactive AR assets at a later time.
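The float-to-ceiling behavior described above can be sketched in a few lines. This is only an illustrative, engine-agnostic sketch in Python, not the Unity prototype itself: the `Asset` class, the `float_up_step` function, and the `speed` and `margin` values are all hypothetical names and numbers chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    """Hypothetical stand-in for a hologram or app window."""
    name: str
    height: float          # metres above the floor
    active: bool = True    # inactive assets are treated as "trash"


def float_up_step(asset, ceiling_height, dt, speed=0.5, margin=0.1):
    """Advance one frame: an inactive asset rises until it rests just below the ceiling."""
    if asset.active:
        return asset.height                      # active assets stay where the user left them
    rest_height = ceiling_height - margin        # assumed resting position under the ceiling
    asset.height = min(rest_height, asset.height + speed * dt)
    return asset.height


# Example: simulate 10 seconds at 60 frames per second in a room with a 2.4 m ceiling.
raptor = Asset("raptor", height=1.0, active=False)
lamp = Asset("lamp", height=1.0, active=True)
for _ in range(600):
    float_up_step(raptor, ceiling_height=2.4, dt=1 / 60)
    float_up_step(lamp, ceiling_height=2.4, dt=1 / 60)
```

In this sketch the inactive raptor drifts up and parks under the ceiling, while the active lamp stays where it is. A real implementation would also need the ceiling height from the device's spatial map, which is assumed as a fixed number here.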

Phase 04: TAKING OUT THE TRASH

Our new set of interactions proposes three solutions:

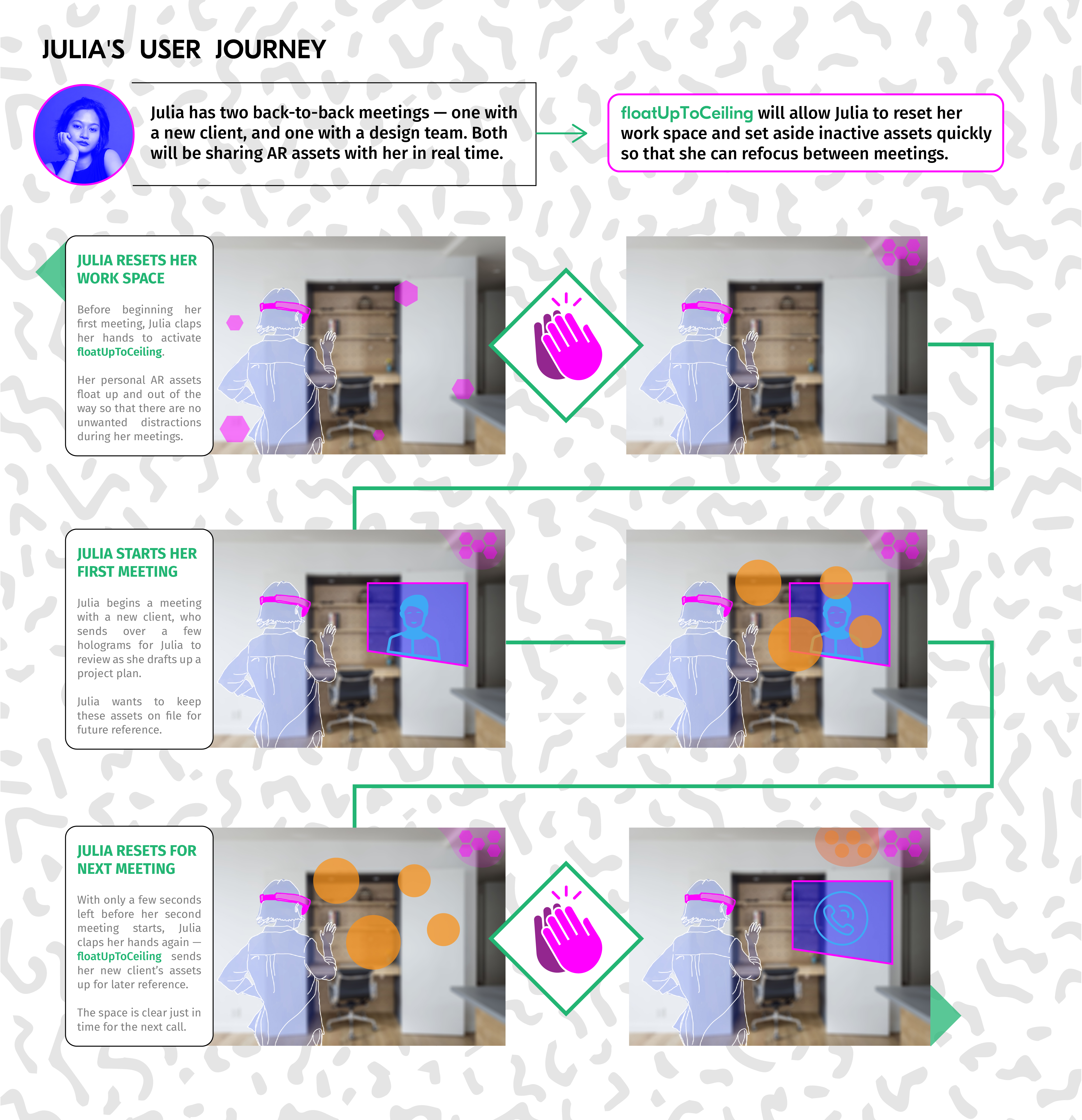

1. floatUpToCeiling

Although we proposed two kinds of gestures for the floatUpToCeiling interaction, we were only able to prototype the GAZE + TAP gesture. The CLAP gesture requires custom gesture inputs.

To at least give a sense of how the CLAP gesture would work, however, we created a user journey map where one of our personas uses that gesture / interaction to address her AR clutter-related problem.

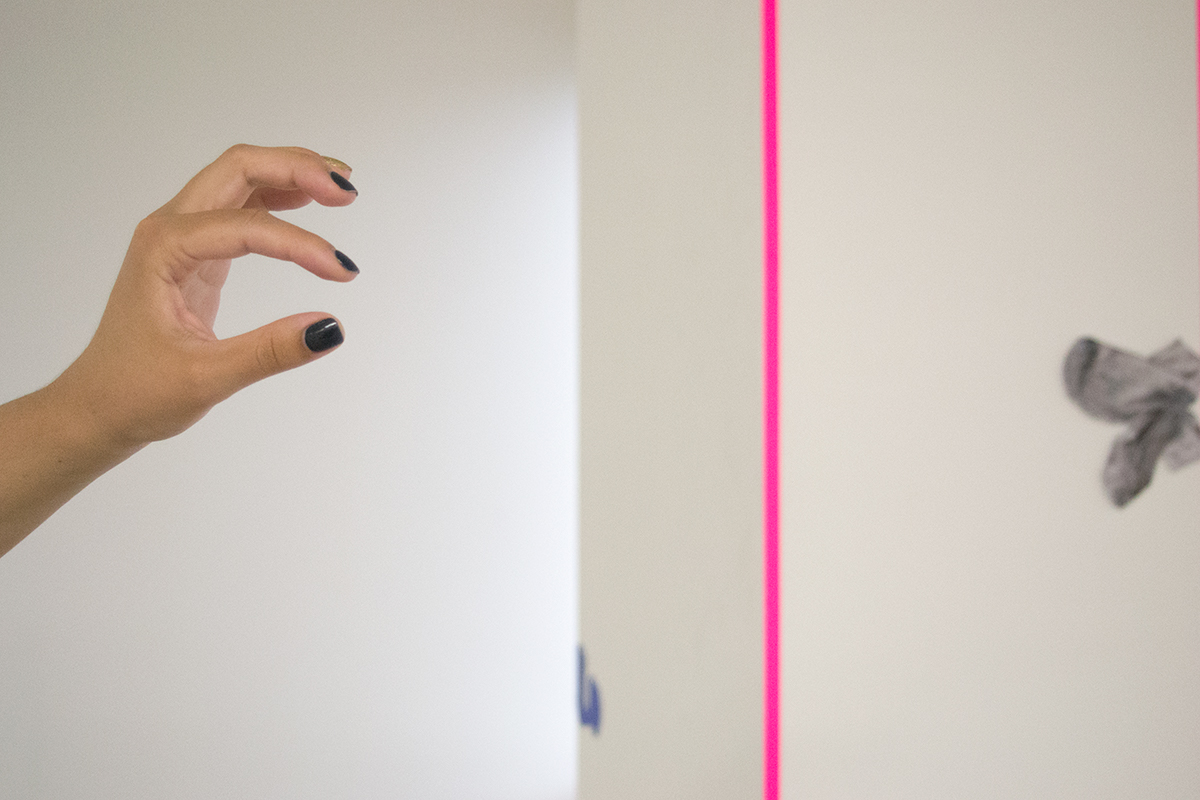

↳ The physical installation space (i.e. the “home” in which our demos take place).

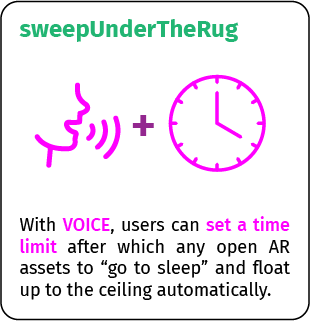

2. sweepUnderTheRug

Although we did not prototype this interaction directly in the Hololens, we created a physical installation space, and assembled a collection of “sleeping” holograms in the ceiling of that space to show how inactive holograms might gather and cluster when not in use.

3. excavateAssets

We created a Hololens demo that allows a user to scan through an accumulation of holograms and other AR assets on the ceiling, using their gaze. We did not prototype the voice component of this interaction, but we imagine that anything that the user can “see” can be retrieved.
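As a rough illustration of gaze-based scanning, the sketch below picks the ceiling asset that lies closest to the direction the user is looking. It is a simplified Python sketch and not our Hololens demo: the `gaze_pick` function, the 10-degree threshold, and the asset positions are assumptions made for the example.

```python
import math


def gaze_pick(head, gaze_dir, assets, max_angle_deg=10.0):
    """Return the name of the asset nearest the gaze direction, or None if none is close enough."""
    gx, gy, gz = gaze_dir
    gaze_len = math.sqrt(gx * gx + gy * gy + gz * gz)
    if gaze_len == 0:
        return None
    best_name, best_angle = None, max_angle_deg
    for name, (x, y, z) in assets.items():
        # Vector from the user's head to the asset.
        dx, dy, dz = x - head[0], y - head[1], z - head[2]
        dist = math.sqrt(dx * dx + dy * dy + dz * dz)
        if dist == 0:
            continue
        # Angle between the gaze direction and the direction to the asset.
        cos_a = (gx * dx + gy * dy + gz * dz) / (gaze_len * dist)
        angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))
        if angle <= best_angle:
            best_name, best_angle = name, angle
    return best_name


# Hypothetical scene: head at 1.6 m, two "sleeping" assets parked at 2.3 m.
head = (0.0, 1.6, 0.0)
ceiling_assets = {
    "raptor": (0.0, 2.3, 1.0),
    "calendar": (1.5, 2.3, 0.0),
}
looking_at = gaze_pick(head, (0.0, 0.7, 1.0), ceiling_assets)
```

Here `looking_at` names the asset the user is gazing toward. The imagined voice component could then act on that name, for example to bring the asset back down.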